SUMMER 2012 CONTENTS

Special Report

B!G Data

What it means for our health and the future of medical research

By Krista Conger

Photo-illustration by Dwight Eschliman

“I think I’m getting sick,” says Michael Snyder, PhD, cheerfully, unbuttoning his shirt cuff and rolling up his sleeve to show me the inside of his elbow.

A ball of white cotton puffs out from under the white tape used to cover the site of a blood draw. Snyder, 57, a lean, intense-looking man who chairs Stanford’s genetics department, doesn’t sound at all upset about his impending illness. In fact, he sounds positively satisfied. “It’s a viral illness; one of the hazards of having young children, I guess,” he says, smiling. “So far I’m only a little sniffly. But it does give me another sample.”

I sat in a chair in his small office, pondering the handshakes we had exchanged moments earlier and surreptitiously wiping my palm on my jeans.

Blood samples are nothing new to any of us. They’re a routine part of medical checkups and give vital information to clinicians about our cholesterol and blood sugar levels, the function of our immune systems, and our production of hormones and other metabolites. The fleeting pinch of discomfort is a small price to pay for such a peek inside our own bodies.

But Snyder’s recently obtained blood sample was destined for a different fate. It was also what had brought me to his office on an unseasonably warm afternoon in February. While the standard blood test panel recommended during routine checkups assesses the presence and levels of only a few variables, such as the total numbers of red and white blood cells, hemoglobin and cholesterol levels, Snyder’s would undergo a much more intense analysis — yielding millions of bits of data for an ultra-high-definition portrait of health and disease.

The unprecedented study, termed an integrative personal omics profile, or iPOP, generated billions of individual data points about Snyder’s health, to the tune of about 30 terabytes (that’s about 30,000 gigabytes, or enough CD-quality audio to play non-stop for seven years).

It’s a lot of data. And he’s just one man, with just a few months’ worth of samples. Other biological databases that are frequently used, and still growing, include an effort to categorize human genetic variation (more than 200 terabytes as of March) and another to sequence genomes of 20 different types of human cancer (300 terabytes and counting).

Which raises the question: What wonderful things can we do with all this data? How do we store it, analyze it and share it, while also keeping the identities of those who provided the samples private and protecting them from discrimination? Meeting this challenge may be the most pressing issue in biomedicine today.

We’re drowning in data. Supermarkets, credit cards, Amazon and Facebook. Electronic medical records, digital television, cell phones. The universe has gone wild with the chirps, clicks, whirs and hums of feral information. And it truly is feral: According to a 2008 white paper from the market research firm International Data Corp., the amount of data generated surpassed our ability to store it back in 2007. The cat is out of the bag.

But what are we talking about, really? To truly understand the issue, it’s necessary to do a little background work. Computers store data in a binary fashion with bits and bytes. A bit is defined by one of two possible, mutually exclusive states of existence: up or down, for example, or on or off. In computers, the states are represented by 0 and 1. A byte is the number of bits (usually eight) necessary to convey a unit of information, such as a letter of text or a small number on which to perform a calculation. A kilobyte is 1,000 bytes; a megabyte is 1,000 kilobytes.

The numbers pile up relentlessly: giga, tera, peta, exa, zetta, which is 1 sextillion bytes, up to yotta, which is officially too big to imagine, according to The Economist. If you want to try to imagine it anyway, a yottabyte equals about a septillion bytes. You write septillion as a 1 followed by 24 zeroes.
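For readers who want to check the ladder of prefixes, here is a minimal sketch in Python. It assumes the decimal convention used above, in which each step up is a factor of 1,000. The names `PREFIXES`, `bytes_in` and `zeros` are illustrative only and do not come from any library.

```python
# Decimal byte prefixes in ascending order; each step is a factor of 1,000.
PREFIXES = ["kilo", "mega", "giga", "tera", "peta", "exa", "zetta", "yotta"]


def bytes_in(prefix: str) -> int:
    """Return the number of bytes in one <prefix>byte, using powers of 1,000."""
    return 1000 ** (PREFIXES.index(prefix) + 1)


def zeros(power_of_ten: int) -> int:
    """Count the zeros that follow the leading 1 in a power of ten."""
    return len(str(power_of_ten)) - 1


for prefix in PREFIXES:
    print(f"1 {prefix}byte = 1 followed by {zeros(bytes_in(prefix))} zeros")
```

Run as written, the loop ends with the yottabyte line, which should show the same 24 zeros described above.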

In 2010, the amount of digital information — from high-definition television signals to Internet browsing information to credit card purchases and more — created and shared exceeded 1 zettabyte for the first time. In 2011, it approached 2. The amount has grown by a factor of nine in five years, according to IDC, which pointed out in its 2011 report that there are “nearly as many bits of information in the digital universe as stars in our physical universe.”

In March, the federal government announced the Big Data Research and Development Initiative, a $200 million commitment to “greatly improve the tools and techniques needed to access, organize and glean discoveries from huge volumes of digital data.” In particular, the National Institutes of Health and the National Science Foundation are offering up to $25 million for devising ways to visualize and extract biological and medical information from large and diverse data sets like Snyder’s study. At the same time, the NIH announced it would provide researchers free access to all 200 terabytes of the 1,000 Genomes Project, an attempt to catalog human genetic variation, via Amazon Web Services.

Successfully managing the “data deluge” will allow scientists to compare the genomes of similar types of cancers to identify how critical regulatory pathways go awry, to ferret out previously unknown and unsuspected drug interactions and side effects, to precisely track the genetic changes that have allowed evolving humans to populate the globe, and even to determine how our genes and environment interact to cause obesity, osteoporosis and other chronic diseases.

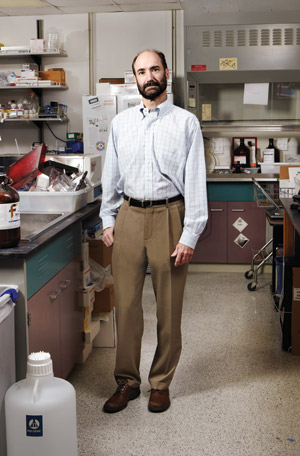

Photo by Colin Clark

Mike Snyder, chair of genetics, has amassed billions of data points in his health profile.

Mike Snyder’s “omics study” is still only a tiny fraction of this total. But the iPOP represents on a small scale the complex challenges of accumulating, storing, sharing and protecting biological and medical data — as well as the need to extract useful clinical information on an ongoing basis. And we’d better figure out what we’re doing.

“This is going to be standard medical care,” says Snyder. “It will change the way we practice medicine.” If he is right, we’re looking at the ongoing accumulation of terabytes of health information for each of us during our lifetimes.

That’s because the study’s outcome (published in Cell in March) is like nothing medicine has ever seen before. It’s a series of snapshots that show how our bodies use the DNA blueprints in our genomes to churn out RNA and protein molecules in varying amounts and types precisely calibrated to respond to the changing conditions in which we live.

The result is an exquisitely crafted machine that turns on a dime to metabolize food, flex muscles, breathe air, fight off infections and make all the other adjustments that keep us healthy. A misstep can lead to disease or illness; understanding this dance could help predict problems before they start.

So where’s all this data coming from, and where do we keep it? Great leaps in scientific knowledge have almost always been preceded by improvements in methods of measurement or analysis. The invention of the optical microscope opened a new frontier for biologists of the early to mid-1600s; the telescope performed a similar feat for astronomers of the period. Biologists of the 20th century have their own touchstones, including several developed at Stanford: the invention of the fluorescence-activated cell sorter around 1970 by Leonard Herzenberg; the invention of microarray technology in Patrick Brown’s and Ronald Davis’s labs in the mid-1990s, and the recent development of ultra-high-throughput DNA sequencing technology and microfluidic circuits by bioengineer Stephen Quake. Each of these technological leaps sparked rapid accumulation of previously unheard of volumes of data — and a need for some place to store it.

One of the first publicly available databases, Genbank, started in 1982 as a way for individual researchers to share DNA sequences of interest. In November 2010, the database, which is maintained by the National Center for Biotechnology Information, contained hundreds of billions of nucleotides from about 380,000 species. It doubled in size approximately every 18 months from its inception until 2007.

Other databases have sprung up over the years, including OMIM, or Online Mendelian Inheritance in Man, which catalogs the relationship between more than 12,000 genes and all known inherited diseases, and GEO, or Gene Expression Omnibus, which contains over 700,000 RNA sequences and microarray data that illuminate how the instructions in DNA are put into action in various tissues and at specific developmental times.

But we can’t stop there. It’s also necessary to know how gene expression is affected by outside forces like diet or environment, and how the protein product of that gene interacts with the hundreds or thousands of other proteins in the cells to achieve a certain biological outcome. And then there’s the issue of time, which, according to Douglas Brutlag, PhD, a bioinformatics expert and emeritus professor of biochemistry and medicine at Stanford, is “the worst dimension of all.”

“Ideally, we would like to have a database of all human protein-coding genes, of which there are about 22,000,” says Brutlag. “We’d include information about their levels of expression in every tissue (about 200) and in every cell type (about 10 to 20 in each tissue). And then we’d need to know exactly when these genes were expressed in each of these locations during development. You start multiplying these variables together, and then you see, ‘Now that’s big data.’” It can also be a big headache.
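Brutlag's multiplication is easy to try. The sketch below uses only the figures he quotes (about 22,000 genes, about 200 tissues, 10 to 20 cell types per tissue). The `time_points` argument is a hypothetical knob added for illustration, since the article gives no number for developmental time points.

```python
def expression_entries(genes: int, tissues: int, cell_types: int, time_points: int = 1) -> int:
    """Number of gene-by-tissue-by-cell-type-by-time measurements in a full database."""
    return genes * tissues * cell_types * time_points


GENES = 22_000        # protein-coding genes, per Brutlag
TISSUES = 200         # tissues, per Brutlag

low = expression_entries(GENES, TISSUES, 10)
high = expression_entries(GENES, TISSUES, 20)
print(f"One snapshot in time: {low:,} to {high:,} entries")

# Hypothetical: 100 developmental time points (an assumed figure, not from the article).
print(f"With 100 time points: {expression_entries(GENES, TISSUES, 20, 100):,} entries")
```

Even before time enters the picture, a single snapshot runs to tens of millions of entries, and each added dimension multiplies the total again.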

When he first proffered his arm in January 2010, Mike Snyder wasn’t thinking of the volume of data his iPOP study might generate. He was focused mostly on the type of information he and his lab members would receive.

“Currently, we routinely measure fewer than 20 variables in a standard laboratory blood test,” says Snyder, who is also the Stanford W. Ascherman, MD, FACS, Professor in Genetics. “We could, and should, be measuring many, many thousands. We could get a much clearer resolution of what’s going on with our health at any one point in time.” But the process is constrained by habit and infrastructure — standard medical labs are not set up to do anything like Snyder’s iPOP, and many primary care physicians would have no idea what to do with the information once they had it. It’s also expensive: It costs about $2,500 to extract all the bits of information from each of Snyder’s blood samples.

The first step of Snyder’s iPOP was relatively simple: He had his whole genome sequenced. What was a daunting task less than two decades ago is now a straightforward, rapid procedure with a cost approaching $1,000. Furthermore, the whole-genome sequence of any one individual doesn’t actually take up that much room in our universe of digital storage. The final reference sequence compiled by the Human Genome Project can be stored in a modest 3 gigabytes or so, a small portion of a single hard drive.

But while pulling out differences between a sequence from an individual like Snyder and the reference sequence is simple, ascertaining what those differences mean is another matter entirely. The layers of annotation, describing what genes are involved in which processes, add complexity. For that, it’s necessary to understand which variation is normal, which is inconsequential and which might be associated with disease. Here, the field of bioinformatics can help.

Bioinformaticians marry computer science with information technology to develop new ways to analyze biological and health data. The field is one of the most rapidly growing in medicine and includes Stanford researchers Atul Butte, MD, PhD, and Russ Altman, MD, PhD, each of whom has developed computerized algorithms to analyze publicly available health information in large databases. These algorithms enable researchers to approach the data without preconceived ideas about what they might find.

“We’ve been so focused on generating hypotheses,” says cardiologist Euan Ashley, MD, “but the availability of big data sets allows the data to speak to you. Meaningful things can pop out that you hadn’t expected. In contrast, with a hypothesis, you’re never going to be truly surprised at your result.” Hypothesis-driven science isn’t dead, say many scientists, but it’s not the most useful way to analyze big data sets.

Ashley and colleagues, including Snyder, Altman and Butte, have developed a computer algorithm called RiskOGram that incorporates information from multiple disease-associated gene variants in an individual’s whole-genome sequence to come up with an overall risk profile. They first used the algorithm to analyze the genome of Quake, their bioengineering colleague. Since then it’s been used on whole-genome sequences from several additional people, including the family of entrepreneur John West and, most recently, Snyder himself. Together, Altman, Ashley, Butte, West and Snyder have launched a company called Personalis to apply genome interpretation to clinical medicine.

When thinking about the storage of sequencing data, however, there’s also the issue of sequencing “depth,” which essentially means the number of times each DNA fragment is sequenced during the procedure. Repeated sequencing reduces the possibility of error. Extremely deep sequencing, like that employed with Snyder’s genome (each nucleotide was sequenced, or “covered,” an average of 270 times) comes at a price beyond dollars; it generates a plethora of raw data for storage and analysis. In comparison, the Human Genome Project provided about eightfold coverage of each nucleotide in the reference sequence.
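To get a feel for what depth does to the raw data pile, here is a rough sketch. It assumes a genome of about 3 billion nucleotides, a commonly cited round figure that the article does not state directly, and it counts only sequenced nucleotides, not the quality scores and other bookkeeping that real sequencing files also carry.

```python
GENOME_BASES = 3_000_000_000  # assumed round figure for the human genome's length


def raw_bases(coverage: int, genome_bases: int = GENOME_BASES) -> int:
    """Approximate nucleotides read when each position is covered `coverage` times on average."""
    return coverage * genome_bases


snyder = raw_bases(270)   # average coverage reported for Snyder's genome
hgp = raw_bases(8)        # roughly eightfold coverage for the Human Genome Project

print(f"270-fold coverage: about {snyder:,} nucleotides read")
print(f"  8-fold coverage: about {hgp:,} nucleotides read")
print(f"Ratio: about {snyder / hgp:.0f} times more raw sequence")
```

The point of the comparison is the ratio rather than the absolute totals: deep coverage multiplies the raw data in direct proportion to the depth.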

Finally, today’s sequencing technologies don’t sequence an entire chromosome in one swoop, but first break it into millions of short, random fragments. This leads to another type of biological data to store. After each fragment is sequenced, computer algorithms assemble the small chunks into chromosome-length pieces based on bits of sequence overlap among the fragments. It’s necessary to save the raw data to closely investigate any discrepancies in critical disease-associated regions.
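The idea of stitching fragments together by their overlaps can be shown with a toy example. The sketch below uses a simple greedy strategy (always merge the two pieces with the longest overlap). This is a swapped-in teaching technique, not the method used by any production assembler or by Snyder's team, and the fragments are invented for illustration.

```python
def overlap(a: str, b: str, min_len: int = 3) -> int:
    """Length of the longest suffix of `a` that matches a prefix of `b` (0 if under min_len)."""
    for n in range(min(len(a), len(b)), min_len - 1, -1):
        if a.endswith(b[:n]):
            return n
    return 0


def greedy_assemble(fragments: list[str], min_len: int = 3) -> list[str]:
    """Repeatedly merge the pair of fragments with the longest overlap until none overlap."""
    frags = list(dict.fromkeys(fragments))  # drop exact duplicates, keep order
    while True:
        best_n, best_i, best_j = 0, -1, -1
        for i, a in enumerate(frags):
            for j, b in enumerate(frags):
                if i != j:
                    n = overlap(a, b, min_len)
                    if n > best_n:
                        best_n, best_i, best_j = n, i, j
        if best_n == 0:
            return frags  # nothing left to merge
        merged = frags[best_i] + frags[best_j][best_n:]
        frags = [f for k, f in enumerate(frags) if k not in (best_i, best_j)]
        frags.append(merged)


# Invented fragments cut from the made-up sequence ATGGCGTACGTTAGC.
reads = ["ATGGCGTA", "GCGTACGTT", "ACGTTAGC"]
print(greedy_assemble(reads))
```

Real genomes are full of repeated stretches that can fool a greedy merge like this one, which is one reason the raw reads are worth keeping for a second look.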

What’s it all add up to? Well, a “normal” genome might require only a few gigabytes to store. But Snyder’s, with the deep coverage, raw data and extensive annotation, takes up a whopping 2 terabytes, or about 2,000 gigabytes — plenty of fodder for the RiskOGram.

“I was amused to see type-2 diabetes emerging so strongly,” says Snyder of the results of the analysis. Despite his fondness for cheeseburgers and mint chocolate chip ice cream, he had no known risk factors or family history of the disease, and he was lean and relatively active. Even so, the RiskOGram predicted that his risk of developing type-2 diabetes was 47 percent, which is more than double that of other men his age.

Snyder also learned he had a genetic predisposition to basal cell carcinoma and a propensity for heart disease (a finding that caused him to start cholesterol-lowering medication). That last finding wasn’t unexpected; many family members on his father’s side had died from heart failure.

What Snyder was doing was, in principle, not unusual. It was just very thorough. Companies like 23andMe allow consumers to send in a cheek swab and receive a report of disease risk based on regions of genetic variation called single nucleotide polymorphisms, or SNPs. Unlike whole-genome sequencing, tests of this type scan the genome for the presence of variations already known to affect health or disease risk.

As a precaution, Snyder decided to include regular blood sugar level tests as part of his iPOP — high glucose indicates diabetes. He also scheduled a meeting with Stanford endocrinologists and diabetes experts Sun Kim, MD, and Gerald Reaven, MD, who suggested a rigorous three-hour fasting blood sugar test. Snyder agreed, but his hectic schedule delayed the test by about five months. A more pressing issue was how to upload and store the information generated by his iPOP to the appropriate public databases. The sheer volume of the data complicated the task enormously.

“The hard part was submitting all the data,” says Snyder. “Transferring these large files takes time. The whole process of submission took several weeks.” Since the publication of Snyder’s iPOP study, many other researchers have requested access to the raw data. In most cases, Snyder says, it’s been far easier to simply ship a hard drive containing the data to the requesters than to transmit the files electronically.
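A back-of-the-envelope calculation shows why the mail can beat the network. The sketch below takes the roughly 30 terabytes cited earlier for the iPOP and a few hypothetical sustained link speeds. The speeds are assumptions chosen for illustration and are not the rates Snyder's lab actually had.

```python
SECONDS_PER_DAY = 86_400


def transfer_days(size_bytes: float, megabits_per_second: float) -> float:
    """Days needed to move `size_bytes` at a steady rate, ignoring overhead and interruptions."""
    seconds = size_bytes * 8 / (megabits_per_second * 1_000_000)
    return seconds / SECONDS_PER_DAY


IPOP_BYTES = 30 * 1000 ** 4  # about 30 terabytes, per the article

for rate in (10, 100, 1000):  # hypothetical sustained speeds in megabits per second
    print(f"{rate:>5} Mbit/s: about {transfer_days(IPOP_BYTES, rate):.1f} days")
```

Under these assumptions, only the fastest of the three links finishes in days rather than weeks or months, while a hard drive in a courier's van arrives overnight.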

Since the inception of Genbank and OMIM, federal biological databases have gotten progressively more ambitious. The 1,000 Genomes Project, for example, aims to curate and catalog naturally occurring differences in the genomes of 2,600 ethnically diverse humans (as of March, the project had collected more than 200 terabytes of data on about 1,700 humans, all publicly accessible).

And then there is the Cancer Genome Atlas, or TCGA — an effort to sequence the entire, error-riddled genome of tumor cells from 20 human cancers. Scientists involved in the effort, funded by the National Cancer Institute and the National Human Genome Research Institute, have sequenced the genes from more than 1,000 tumor genomes. Furthermore, researchers typically collect two genomes from each patient: one from normal tissue and one from the tumor. The TCGA project does very deep sequencing of the genes in both samples, coupled with complete genome sequencing for many samples. Total data produced as of May 2012 is more than 300 terabytes.

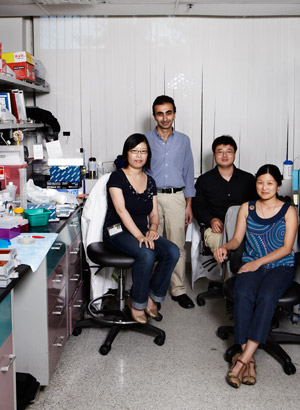

Photo by Colin Clark

L to R: Lihua Jiang, George Mias, Rui Chen and Jennifer Li-Pook-Than co-authored the paper describing the analysis of Mike Snyder’s health data.

“Just moving these files from one institution to another is a major headache,” says David Haussler, PhD, a distinguished professor of biomolecular engineering at the University of California-Santa Cruz and a co-principal investigator of one of TCGA’s seven Genome Data Analysis Centers. He and his colleagues are developing new algorithms and software to transfer and analyze the resulting data.

In May, Haussler’s Santa Cruz group announced the completion of the first step in the construction of the Cancer Genomics Hub — a large-scale data repository and user portal for the National Cancer Institute’s cancer genome research programs. Based in San Diego, the hub will support not just TCGA, but also two related large-scale projects: one (called TARGET) focused on five childhood cancers and another (called CGCI) focused primarily on HIV-associated cancers.

“In addition to being one of the genome data analysis centers for TCGA, our Cancer Genomics Hub will store the sequences themselves,” says Haussler. “All of these data will be gathered into one place and made available to researchers.”

Comparing the genomes of thousands of cancers will allow researchers to distinguish meaningful differences in the hundreds of mutations harbored by each tumor and to develop therapies that target these mutations. It’s critical to cancer research because the immense variation among and even within individual cancers stymies traditional research conducted on panels of tumors handily available at a scientist’s institution. Instead it’s necessary to compare thousands or tens of thousands of tumors to identify mutations that drive the disease and its response to therapy.

Haussler and his collaborators designed the San Diego facility to handle 5 petabytes of information and to be scalable to handle 20. “In the last few years the TCGA project has produced more data than the combined total of all other DNA sequencing efforts accumulated by NCBI from its inception as Genbank in 1982 until January of 2012,” says Haussler. “TCGA will generate orders of magnitude more data beyond this. If we had the money, we’d certainly be sequencing multiple biopsies, or, perhaps one day, even individual cells from each tumor.”

According to Haussler, the amount of information generated by the researchers working on the TCGA project is comparable to that generated by the Large Hadron Collider particle accelerator. “In cases like this, it’s much simpler to bring the computation to the data, than the other way around,” says Haussler. In many cases he and his colleagues suggest that researchers interested in conducting repeated studies co-locate some of their computing equipment in the San Diego Supercomputer Center alongside the Cancer Genomics Hub — a riff on Snyder’s smaller-scale solution of mailing hard drives to researchers. “Researchers bringing computation to the data either run analysis on their own custom computing platforms located near the data or ‘rent’ time on generic facilities — the cloud computing model,” says Haussler. Cloud computing — in this case, hosting the computation on server farms that have access to the data — could be the answer for some databases. Security and privacy concerns could be a roadblock to cloud computing for some uses, especially when patient information is involved.

“Yes, there are a lot of obstacles,” says Haussler. “Chief among them are the sheer size of the data involved, the issues of privacy and compliance, and the natural reluctance of some researchers to share their data with one another. But dammit, we’re overcoming them. It’s getting better all the time.”

“There’s no way you have diabetes,” Sun Kim told Snyder in February 2011 as the two walked back to a phlebotomy chair at Stanford Hospital & Clinics. Snyder’s packed schedule had finally allowed him to squeeze in the three-hour fasting blood sugar test recommended by Reaven. Snyder agreed. He’d been performing quick tests of his own levels for months by that time.

“Sometimes I fasted, sometimes I didn’t,” says Snyder of the tests. “It didn’t seem to matter. My glucose levels were always very normal, even when I’d just eaten a cheeseburger and fries for lunch. There was never any indication of a problem.”

And his overall health had remained good, with the exception of a recent viral illness he probably contracted from one of his two daughters, Emma (now age 11) and Eve (age 6). (He’d missed several days of work as a result.) But he pressed forward in the name of science.

“There are some indications in my genome that I may be at risk,” he reminded Kim, while settling into the chair. “Let’s go ahead with the test.”

And then the real surprises started.

Snyder’s initial blood glucose level was 127. Normal fasting glucose levels range between 70 and 99 milligrams per deciliter.

“We were both really surprised,” says Snyder. “I didn’t have my data sheets with me at the time, but I knew it had always been quite low. So Kim repeated the analysis.” The result was the same. The three-hour test requires the patient to drink a sugary drink and give three subsequent readings as the body metabolizes the sudden influx of sugar. The results showed that, although Snyder’s initial blood sugar levels were high, he was still able to metabolize the sugar normally. But that jarring initial reading lingered in Snyder’s mind.

At that time, Snyder’s iPOP, or “personal ‘omics’ profile,” study had been going for about nine months. The word “omics” indicates the study of a complete body of information, such as the genome (which is all DNA in a cell), or the proteome (which is all the proteins). Snyder’s iPOP also included his metabolome (metabolites), his transcriptome (RNA transcripts) and autoantibody profiles, among other things. It’s a dynamic, ongoing analysis that accumulates billions of additional data points with each blood sample he contributes. He was contributing a sample for analysis about every two months, more often when he became ill.

With each sample Snyder gave (about 20 in all over the course of the initial study, and 42 samples as of early June 2012), the researchers took dozens of molecular snapshots, using different techniques, of thousands of variables and then compared them over time. The composite result was a dynamic picture of how his body responded to illness and disease.

Some of these snapshots included sequencing the RNA transcripts that carry the instructions encoded by DNA in a cell’s nucleus out to the cytoplasm to be translated into proteins. Par for the course, the researchers completed this task at an unheard-of level of detail, sequencing 2.67 billion snippets of RNA over the course of the study. These sequences allowed them to see what proteins or regulatory molecules Snyder’s body was producing at each time point and, similar to looking at a list of materials at a construction site, to infer what was going on inside his cells. Overall, the researchers tracked nearly 20,000 distinct transcripts coding for 12,000 genes — each submitted to the GEO database — and measured the relative levels of more than 4,000 proteins and 1,000 metabolites in Snyder’s blood. In particular, they measured the levels of several immune molecules called cytokines, which changed dramatically during periods of illness like the one he’d experienced two weeks before his fasting glucose test.

Snyder believed in submitting his data to public databases because it stands to help other investigators. In many cases it’s also required prior to scientific publication of any subsequent results. But private databases have proven they can also facilitate research. According to a press release from 23andMe in June 2011, the company had approximately 100,000 customers, of whom more than 76,000 had consented to have their de-identified information used for scientific research. For example, scientists compared data from over 3,000 patients with Parkinson’s disease with those of nearly 30,000 existing 23andMe clients without the disease. They found two novel, previously unsuspected gene variants associated with the disorder.

“The fact that we are searching these genomes for recurring patterns without any preconceived notions about what we might find is what’s so fundamentally important about this approach,” says Haussler. “In this way, we are able to recognize any gene that’s recurrently mutated in cancer, for example, regardless of any expectations we may have.”

The approach works well for other databases too. Stanford’s Russ Altman, MD, PhD, a professor of bioengineering, of genetics and of medicine, took a similar tack to reveal unexpected drug side effects and drug-drug interactions by using the Food and Drug Administration’s Adverse Event Reporting System database and a Stanford database of patient information called STRIDE. The Veterans Affairs Administration’s VistA electronic health information system, which contains de-identified information on millions of patients, is also a valuable source of data for researchers.

Big data of all sorts — particularly of the type generated by Snyder’s iPOP — is important to researchers seeking to understand the complex, interconnected dance of the thousands of molecules in each cell. It’s a new type of research known as systems biology.

“We got stuck for a while in the idea of direct causality,” says Ashley, the cardiologist. “We thought we could turn one knob and measure one thing. Now we’re trying to really understand the system. We’re developing an appreciation for the 10,000 things that happen on the right when you poke the network on the left.” Ashley estimates that there are about 4.3 X 10^67, or 43 “unvigintillion” (that’s 43 followed by 66 zeros) possible 20-member gene networks — that is, collections of pathways or actions that accomplish similar goals — within a cell.
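One way to arrive at a number of that size is to count how many different sets of 20 genes can be drawn from the genome. The sketch below assumes a pool of about 20,000 genes. That pool size is an assumption made here for illustration, since the article does not say which figure Ashley used.

```python
import math

ASSUMED_GENES = 20_000  # assumed pool size; not stated in the article
NETWORK_SIZE = 20       # members per network, per Ashley

# Number of distinct ways to choose 20 genes from the pool, ignoring order.
networks = math.comb(ASSUMED_GENES, NETWORK_SIZE)

print(f"Possible {NETWORK_SIZE}-gene sets: about {networks:.1e}")
print(f"Digits in the exact count: {len(str(networks))}")
```

Under that assumption the count comes out close to the figure Ashley cites, which suggests, though it does not prove, that his estimate rests on a similar calculation.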

Seattle-based nonprofit SAGE Bionetworks, started by Stephen Friend, MD, PhD, and bioinformatics whiz Eric Schadt, PhD, aims to help individual researchers tackle systems biology by pooling their data and developing shared computer algorithms to analyze it. It has created Synapse, an online service that the organization bills as an innovation space, providing researchers with a web portal, data analysis tools and scientific communities to encourage collaboration.

Snyder started the iPOP as a way to apply the complex concepts of systems biology to clinical outcomes. But at that moment, he was primarily concerned with just one number: his hemoglobin A1C, which is a more sensitive analysis of glucose levels over a period of weeks. One week after his fasting blood sugar test, he found that his hemoglobin A1C level was also elevated: 6.4 percent (normal ranges are between 4 and 6 percent for non-diabetic people). “At this point, my wife was starting to get a little concerned,” says Snyder.

As was he. Snyder called his mother, a retired schoolteacher in Pennsylvania, to ask a few more questions. She recalled that his grandfather had been diagnosed with high blood sugar levels late in his life. But that seemed minor. “He cut out desserts, and lived to be 87 years old,” says Snyder. All in all, nothing that would automatically raise red flags. But there was that initial fasting glucose level. And the elevated A1C.

So he lined up an appointment with his regular physician about six weeks later. “She, too, took one look at me when I walked in and said, ‘There’s no way you have diabetes,’” says Snyder. But his hemoglobin A1C level at that appointment was 6.7, crossing the threshold for diagnosis. He was diabetic.

“I remember getting the confirmation with the second A1C test,” says Snyder. “It left me very conflicted. Scientifically, it was quite interesting, but personally it’s not news anyone wants to hear. I had a serious health issue for the first time in my life.”

There was never any question that Snyder would submit his personal health information to public databases like Genbank and GEO. Of course he would. And he wasn’t particularly worried about privacy. He has tenure, and relatively good health insurance benefits. But when he visited his primary care physician, he crossed a line. Now his diabetes diagnosis and its implications were medically codified.

“My wife is 10 years younger than I am,” says Snyder, “and we have two young daughters.” As a result, she began looking into increasing his life insurance policy.

“The life insurance company quoted her an additional $7,000 fee because of my physician’s diabetes diagnosis,” says Snyder. “When my wife asked what would happen if I were able to get my glucose levels down, they told her it didn’t matter. Once you’re diabetic, you’re always diabetic, in their eyes. Lower glucose levels just mean you’ve managed your disease.”

Even people with identified genetic risk but no diagnosis may run into trouble: Although the 2008 passage of the Genetic Information Nondiscrimination Act prohibits health insurance companies and employers from discriminating on the basis of genetic information, no such protection exists when it comes to life insurance or long-term disability.

The issue illustrates the delicate balance that must be struck when using biological and health information in clinical decision making and research and shows why many people may be leery about consenting to share their private data. Conversely, researchers working with big data can often find themselves bushwhacking through what seems like an impenetrable thicket of consent forms and privacy concerns.

“Working through issues of compliance with the National Institutes of Health for the data in the TCGA Cancer Genome Hub was like nothing I’ve ever dealt with before,” says Haussler. “Until we come up with an acceptable, more flexible compliance infrastructure, it’s going to be very challenging to move forward into cloud computing.” Issues include how to ascertain who should be able to access the data, and how anonymity can be preserved in the face of genomic sequencing that, in its very nature, identifies each participant.

But things may be about to change. Several groups, including the nonprofit SAGE, are working on a standardized patient consent form. This will allow people contributing personal health information to shared databases to give a single consent to a plethora of research activities conducted on their pooled, anonymous data. Sometimes also called portable legal consent, the effort is essential to streamline research while also protecting patient privacy and prohibiting discrimination. Another effort, MyDataCan, based at Harvard, aims to provide a free repository for individual health data. Using the service, participants can pick and choose which research activities are granted access to their personal data.

Conversely, pending legislation in the California Senate called the Genetic Information Privacy Act would squelch research by requiring consent by individuals for every research project conducted on their DNA, genetic testing results and even family history data, according to Nature magazine. Without such authorization, researchers would have to destroy DNA samples and data after each study — making it impossible for scientists to use genetic databases for anything other than the original purpose for which the information was gathered.

Although it’s clear that issues of privacy still need to be addressed, it’s equally clear that public access to this and other types of biological data can be very useful. And it’s not just researchers who can contribute.

Crowdsourcing is a buzzword that’s become reality in some fields of biology. One example of crowdsourcing, FoldIt, is a game developed by the Center for Game Science at the University of Washington that allows anyone with a computer to solve puzzles of protein folding. Tens of thousands of nonscientists around the world have rejiggered protein chains into a nearly infinite number of possible structures.

Stanford-designed EteRNA is another example: Players design and fold their own RNA molecules. And Atul Butte mentors high school and college students in his lab as they use information from publicly available tumor databases to identify important genes and pathways. [See story, King of the mountain]

It’s a new era — one in which the public and scientists collaborate to plumb vast amounts of data for biological and medical insights. The authors of scientific papers using big data increasingly number in the tens or even hundreds as researchers around the world pool their data and work together to find meaningful outcomes. The issue of who stands to benefit commercially from the results of such research is also unresolved — in May, 23andMe filed a patent related to its discovery of one of the two gene variants associated with Parkinson’s disease, to the consternation of some research participants and scientists who feel that the company is looking to benefit financially from data freely shared by participants. The company, in turn, argues that such patents are necessary to encourage drug development that leads to new therapies for patients. But, regardless of legal tussles, the results of such analyses stand to affect individuals like you and me in a way never before imagined.

Mike Snyder doesn’t eat ice cream anymore. Gone are his trips to snag candy bars from hallway vending machines and the lunches of burgers and fries. He went cold turkey on April 13, 2011. He rides his bike to work nearly every day, and has taken up running again. He lost 15 pounds from his already lanky frame, but for more than two months his blood glucose levels didn’t budge. He began to fear that he would have to go on medication.

But finally, last November, Snyder’s blood glucose levels returned to normal. According to his life insurance company, he’s still diabetic — his condition possibly brought on as a result of his genetic predisposition and the added physiological stress of the viral infection that preceded his diagnosis. But so far he’s managed to dodge the organ and tissue damage caused by excess blood sugar, and the side effects of medications. It’s entirely possible that his iPOP, and its attendant 30 terabytes of data, saved his life. It also gave him new insight into how big data is likely to affect both doctors and patients.

“We’ve trained doctors to be so definitive in the way they treat patients,” he says. “Every medical professional I encountered said there was no way I could have diabetes. But soon the volume of available data is going to overwhelm the ability of physicians to be gatekeepers of information. This will absolutely change how we do medicine.”